Researchers at Children’s Hospital of Philadelphia (CHOP) announced the creation of a new AI technology called CelloType, a comprehensive model designed to more accurately identify and classify cells in high-content tissue images. The findings were published today in the journal Nature Methods.

Spatial omics is a field of study that combines molecular profiling, such as genomics, transcriptomics or proteomics, with spatial information to map where different molecules are located within cells in complex tissues. It provides detailed insights into how a disease develops and progresses at the cellular level, aiding the advancement of precise diagnostics and targeted treatments, a major focus of CHOP’s translational research. This approach allows researchers to study a broad range of complex diseases, such as cancer and chronic kidney disease, by revealing how cellular interactions and microenvironments contribute to disease progression and therapy response. The critical first step in analyzing spatial omics data involves two tasks: cell segmentation (identifying cell boundaries) and classification (calling cell types).

Recent advances in spatial omics technologies have made it possible to analyze intact tissues at the cellular level, offering unparalleled insight into the link between the cellular architecture and function of various tissues and organs. Along these lines, CHOP is currently a collaborator in high-profile projects such as the Human Tumor Atlas Network, the Human BioMolecular Atlas Program (HuBMAP) and the BRAIN Initiative, which use similar technologies to map the spatial organization of various healthy and diseased tissues.

“We are just beginning to unlock the potential of this technology,” said Kai Tan, PhD, the study’s lead author and a professor in the Department of Pediatrics at CHOP. “This approach could redefine how we understand complex tissues at the cellular level, paving the way for transformative breakthroughs in healthcare.”

With the surge in spatial omics data, there is a pressing need for more sophisticated computational tools for data analysis, which led Tan and his team to develop CelloType. The model uses transformer-based deep learning, a type of AI. Deep learning automates the analysis of high-dimensional data, enabling the model to capture complex relationships and context. It is highly efficient at large-scale tasks such as natural language processing and image analysis, learning patterns from data and then making predictions or classifications. In CelloType, this approach is designed to improve accuracy in cell detection, segmentation, and classification.
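In a transformer, the mechanism that lets the model "capture complex relationships and context" is attention: each element of the input (for an image, typically a patch) is updated as a weighted average of every other element, weighted by how similar they are. The sketch below is a minimal, plain-Python illustration of standard scaled dot-product attention on toy vectors. It shows the general technique only; it is not CelloType's code, and the function names and inputs are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention (hypothetical helper for illustration).

    For each query row, score it against every key row, scale by sqrt(d),
    turn the scores into weights with softmax, and return the weighted
    average of the value rows.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs

# Toy "patch embeddings": each patch attends to all patches, including itself.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(patches, patches, patches))
```

Because every output row mixes information from all input rows, a patch's representation can reflect distant context in the image, which is the property the paragraph above refers to.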

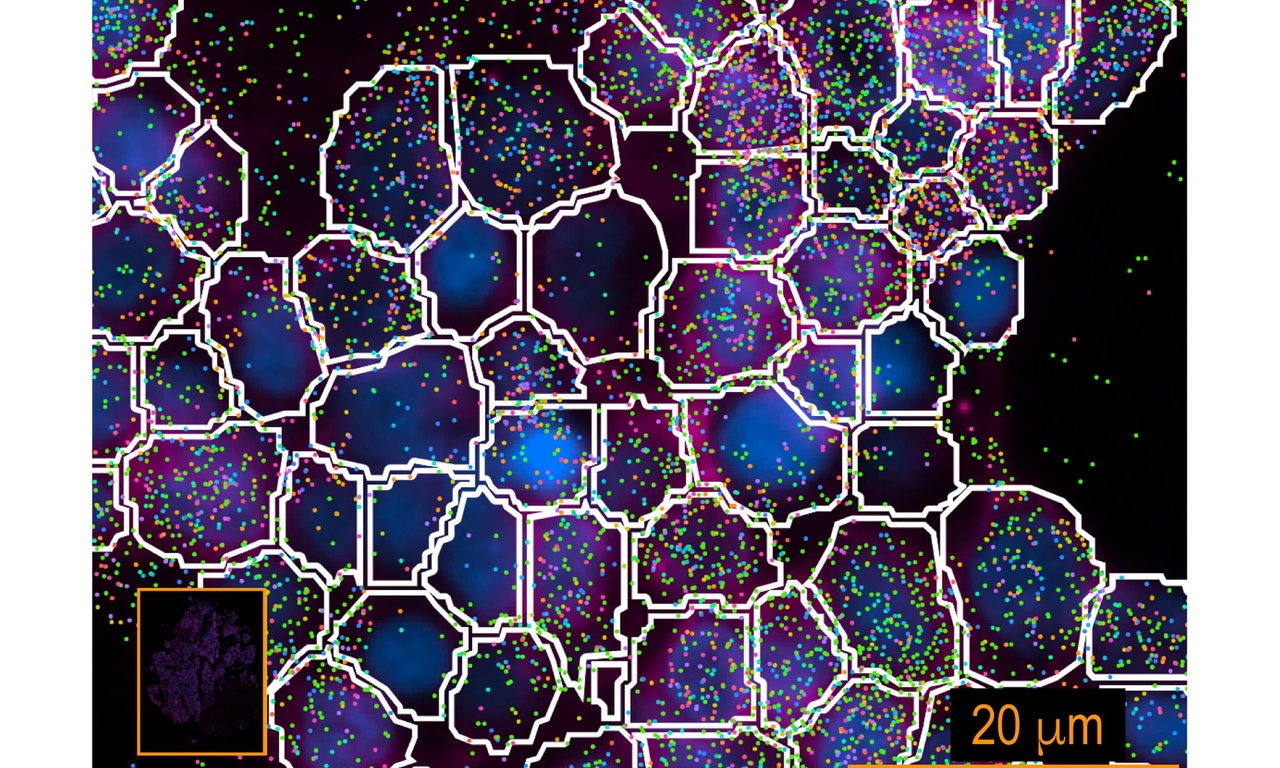

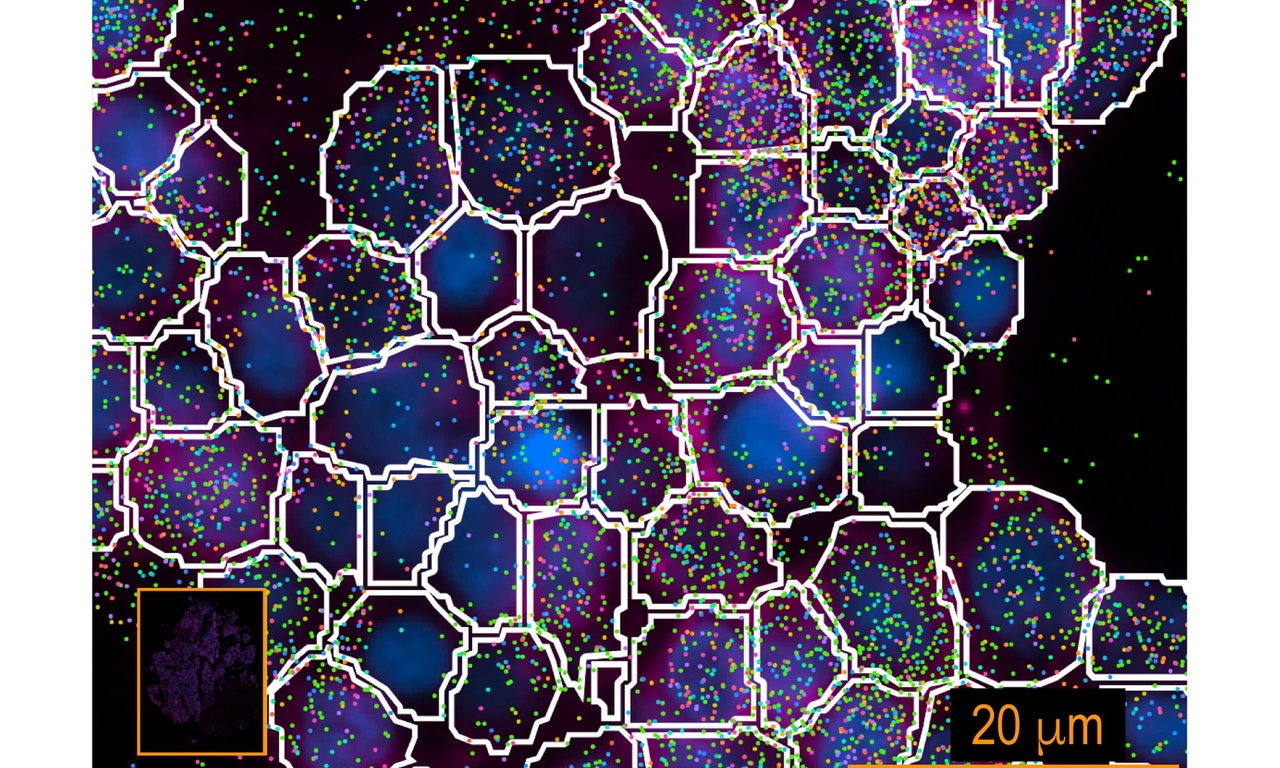

In this study, Tan and his team compared CelloType's performance with a range of traditional methods using animal and human tissue datasets. Traditional methods typically take a two-stage approach, segmentation followed by classification, which is inefficient and less accurate. CelloType instead adopts a multi-task learning strategy that performs segmentation and classification simultaneously, making it more efficient. CelloType also outperformed existing segmentation methods on various types of images, including natural images, brightfield images and fluorescence images.
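Multi-task learning generally means training one network against a combined objective, so that both tasks shape the same shared features instead of being optimized in separate stages. The sketch below shows such a joint loss in plain Python, assuming the model outputs a per-pixel foreground probability for a cell's mask and a probability vector over cell types. The function names and the `cls_weight` parameter are hypothetical, and this is a generic illustration of the technique, not CelloType's actual training objective.

```python
import math

def binary_cross_entropy(pred_mask, true_mask, eps=1e-7):
    """Mean per-pixel BCE between predicted foreground probabilities and a 0/1 mask."""
    total = 0.0
    for p, y in zip(pred_mask, true_mask):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(true_mask)

def cross_entropy(class_probs, true_class, eps=1e-7):
    """Negative log-likelihood of the correct cell type."""
    return -math.log(max(class_probs[true_class], eps))

def multitask_loss(pred_mask, true_mask, class_probs, true_class, cls_weight=1.0):
    """Joint objective: segmentation and classification errors are summed, so
    training on it updates shared features for both tasks at once
    (hypothetical weighting via cls_weight)."""
    seg = binary_cross_entropy(pred_mask, true_mask)
    cls = cross_entropy(class_probs, true_class)
    return seg + cls_weight * cls

# One toy cell: a 4-pixel mask and probabilities over 3 hypothetical cell types.
print(multitask_loss([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0], [0.1, 0.7, 0.2], 1))
```

In a two-stage pipeline, the segmentation model would be trained on the first term alone and a separate classifier on the second; summing them is the simplest way to let one model learn both.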

In cell type classification, CelloType outperformed both a model combining state-of-the-art methods for the individual tasks and a high-performance instance segmentation model, which uses AI to precisely outline individual objects in an image. Using a multiplexed tissue image, an advanced biomedical image that displays multiple biomarkers within a single tissue sample, the researchers also demonstrated how CelloType can perform multi-scale segmentation and classification of both cellular and non-cellular elements in a tissue. CelloType expedited this process, which identifies and separates tissue elements of varying sizes within an image and allows detailed analysis of both small and large structures.

“Our findings underscore the increasingly pivotal role technology plays in today’s biomedical research,” said Tan, who is also an investigator in the Center for Childhood Cancer Research at CHOP. “CelloType advances spatial omics by providing a robust, scalable tool for analyzing complex tissue architectures, thereby expediting discoveries in cellular interactions, tissue function and disease mechanisms.”

CelloType is available as open-source software in a public repository, free for noncommercial use by researchers outside CHOP.

This research was supported by the National Cancer Institute (NCI) Human Tumor Atlas Network grant under award #U2C CA233285 and the National Institutes of Health (NIH) Human BioMolecular Atlas Program grant under award #U54 HL165442.

Pang et al. “CelloType: A Unified Model for Segmentation and Classification of Tissue Images.” Nature Methods. Online November 22, 2024. DOI: 10.1038/s41592-024-02513-1.

Contact us

Jennifer Lee

CHOP Omics Initiative